Marketing Mix Modeling (MMM) is a statistical method for measuring how marketing activities and other factors affect sales. Because it considers all of these drivers together, it gives you a holistic view of how your marketing efforts contribute to results.

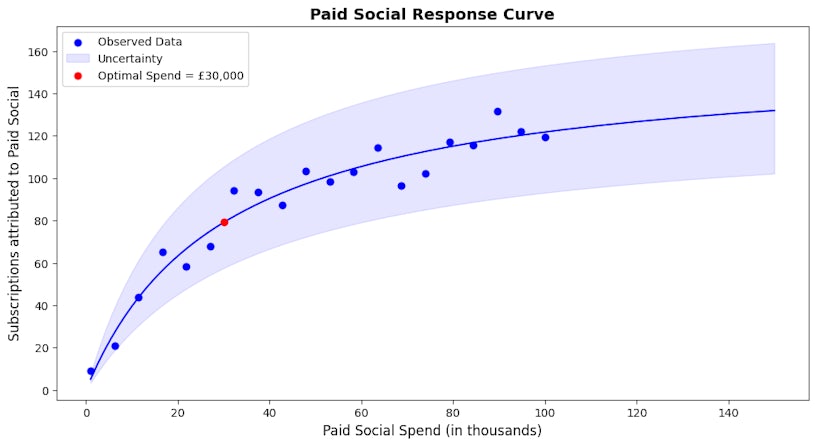

MMM enables you to optimise budget allocation by analysing the effectiveness of each marketing channel and identifying the point of diminishing returns through response curves, which show how sales respond as spend on a channel increases. For example, an MMM output might show that TV ads contribute 20% of sales but are already past their saturation point, while paid search drives 35% with room for growth. In this case, shifting some TV budget to paid search could increase overall sales without increasing total spend. This kind of data-driven adjustment improves your marketing mix and helps your marketing spend deliver maximum impact.
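To make the idea of a response curve concrete, here is a minimal sketch using a Hill-type saturation curve, one common functional form in MMM (the curve shape is our assumption; MMMs use several). The function names and all parameter values are hypothetical, chosen only to mimic the TV versus paid search example: TV is spending well beyond its half-saturation point, while paid search is still on the steep part of its curve.

```python
import numpy as np

def hill_response(spend, max_effect, half_saturation, shape):
    """Hill-type saturation curve: incremental sales at a given spend level.

    max_effect:      ceiling on the sales the channel can drive
    half_saturation: spend at which the channel reaches half of that ceiling
    shape:           steepness of the curve (values > 1 give an S-shape)
    """
    spend = np.asarray(spend, dtype=float)
    return max_effect * spend**shape / (spend**shape + half_saturation**shape)

def marginal_return(spend, max_effect, half_saturation, shape, step=1.0):
    """Approximate extra sales from one more unit of spend (finite difference)."""
    upper = hill_response(spend + step, max_effect, half_saturation, shape)
    lower = hill_response(spend, max_effect, half_saturation, shape)
    return float((upper - lower) / step)

# Hypothetical curve parameters and current weekly spend, for illustration only.
channels = {
    "tv":          dict(max_effect=50_000, half_saturation=20_000, shape=1.0, spend=80_000),
    "paid_search": dict(max_effect=60_000, half_saturation=40_000, shape=1.0, spend=15_000),
}

for name, p in channels.items():
    mr = marginal_return(p["spend"], p["max_effect"], p["half_saturation"], p["shape"])
    print(f"{name}: extra sales per extra unit of spend = {mr:.3f}")
```

The channel with the higher marginal return is where the next unit of budget is likely to work hardest. In a real MMM, the curve parameters would be estimated from data (usually alongside carry-over or adstock effects), not set by hand.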

Traditionally, best practice in MMM has been to group your input data into broad channels (like TV, Google ads, print or OOH) rather than dividing each channel into specific campaign types or funnel stages. Breaking the data down too far can produce an overly complex model that overfits: it becomes tailored to past trends, including random noise, rather than to the underlying relationships. An overfitted MMM loses its ability to generalise to future periods, which makes it harder to interpret and less reliable.

Overfitting results in poor forecasting ability, making the model unreliable for future media planning. Relying on such a model increases your risk of making bad decisions, such as over-investing in ineffective channels or underfunding high-performing ones, ultimately leading to inefficient budget allocation, wasted resources, and reduced ROI on marketing campaigns.

However, as marketers, we often want to understand the impact of specific campaigns, targeting strategies, or funnel stages within each channel. This blog will show you how to identify when your MMM may be too complex and guide you through two ways of achieving more granular insights without overcomplicating your model. By combining these techniques with careful aggregation, it’s possible to balance detailed insights against a manageable, reliable model that remains easy to interpret and useful for future decision-making.

Identifying when your data is too granular

When assessing whether your MMM input data is too granular, you should consider if the level of detail being used is fit for purpose. While it’s tempting to break down data into specific campaigns, audience segments, or even individual ads, this can introduce an overwhelming number of variables into the model. The more granular the data, the more parameters the model has to estimate, increasing the likelihood that it will become overly complex and less capable of generalising beyond the dataset used for training.
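A quick back-of-the-envelope check is to compare the number of weeks of data with the number of parameters the model must estimate. The sketch below assumes each media variable needs three parameters (a coefficient plus adstock and saturation terms) and that the model has an intercept and five control variables; these counts and the helper name are illustrative assumptions, and real models vary.

```python
def parameter_budget(n_weeks, n_media_vars, params_per_media_var=3, n_controls=5):
    """Count model parameters and the weeks of data available per parameter.

    Assumes (illustratively) that each media variable needs a coefficient plus
    adstock and saturation parameters, and that the model also has an intercept
    and n_controls control variables such as seasonality or price.
    """
    n_params = n_media_vars * params_per_media_var + n_controls + 1
    return n_params, n_weeks / n_params

# Two years of weekly data: 6 channels vs the same 6 channels split into 5 campaigns each.
for label, n_vars in [("channel level", 6), ("campaign level", 30)]:
    n_params, weeks_per_param = parameter_budget(n_weeks=104, n_media_vars=n_vars)
    print(f"{label}: {n_params} parameters, {weeks_per_param:.1f} weeks per parameter")
```

Under these assumptions, splitting six channels into five campaigns each leaves roughly one week of data per parameter, compared with about four at channel level, which is the kind of imbalance that invites overfitting.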

As the number of parameters increases, the risk of overfitting and instability grows. You should strive to strike a balance between capturing enough detail to generate actionable insights and maintaining a model that’s simple enough to generalise well. Simplifying your input data without losing key insights can help keep the model efficient and reliable.

Finding the balance between granularity and model simplicity involves using validation techniques that go beyond in-sample performance metrics like R². A high in-sample R² might seem like a good sign, but it can also be a symptom of overfitting, where the model captures noise rather than true, predictive relationships. In a least-squares regression, in-sample R² cannot decrease when you add variables, so a more granular model will almost always score higher. In-sample R² only reflects how well the model explains the historical data; it doesn’t tell you how well the model will generalise to unseen data.
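The synthetic sketch below illustrates why in-sample R² can mislead. It uses plain least squares rather than a full MMM with adstock and saturation, and all data are randomly generated: three variables genuinely drive sales, and batches of pure-noise variables are then added to stand in for over-granular inputs.

```python
import numpy as np

def fitted_values_ols(X, y):
    """Least-squares fit with an intercept; returns in-sample fitted values."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return X1 @ coef

def r_squared(y, y_hat):
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

rng = np.random.default_rng(0)
n_weeks = 104
channels = rng.normal(size=(n_weeks, 3))  # 3 hypothetical channels that really drive sales
sales = 100 + channels @ np.array([5.0, 3.0, 2.0]) + rng.normal(scale=4.0, size=n_weeks)
extra = rng.normal(size=(n_weeks, 60))    # "granular" variables with no real effect on sales

r2_by_extra = {}
for k in [0, 20, 60]:
    X = np.column_stack([channels, extra[:, :k]])  # nested models: each adds more columns
    r2_by_extra[k] = float(r_squared(sales, fitted_values_ols(X, sales)))
    print(f"{k:>2} extra variables -> in-sample R² = {r2_by_extra[k]:.3f}")
```

In-sample R² climbs as the noise variables are added, even though none of them has any real effect on sales.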

A more robust approach is to use Leave-One-Out Cross-Validation (LOO-CV) or other cross-validation methods, which split the data into training and validation sets repeatedly to test the model’s ability to generalise. LOO-CV, in particular, helps assess how well the model performs on unseen data by leaving out one observation at a time, training the model on the rest, and then predicting the omitted data point. This technique provides a more reliable check against overfitting, ensuring that the model is capturing real patterns rather than noise.
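Here is a minimal LOO-CV sketch on synthetic data, again using least squares as a simplified stand-in for a full MMM. Note that plain LOO treats each week as independent, which is only an approximation for time-series data; Bayesian MMM workflows often approximate LOO rather than refitting the model, for example with PSIS-LOO via ArviZ's `loo` function.

```python
import numpy as np

def loo_cv_mse(X, y):
    """Leave-one-out CV for least squares: refit n times, predicting each held-out week."""
    n = len(y)
    X1 = np.column_stack([np.ones(n), X])  # add an intercept column
    errors = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i           # every week except week i
        coef, *_ = np.linalg.lstsq(X1[keep], y[keep], rcond=None)
        errors[i] = y[i] - X1[i] @ coef    # prediction error on the omitted week
    return float(np.mean(errors ** 2))

rng = np.random.default_rng(1)
n_weeks = 104
channels = rng.normal(size=(n_weeks, 3))   # hypothetical channel-level inputs
sales = 100 + channels @ np.array([5.0, 3.0, 2.0]) + rng.normal(scale=4.0, size=n_weeks)
granular = np.column_stack([channels, rng.normal(size=(n_weeks, 60))])  # + 60 irrelevant inputs

mse_channel = loo_cv_mse(channels, sales)
mse_granular = loo_cv_mse(granular, sales)
print(f"channel-level LOO MSE: {mse_channel:.1f}")
print(f"granular LOO MSE:      {mse_granular:.1f}")
```

The granular model fits its training data more closely, yet its leave-one-out error is expected to be markedly higher, which is exactly the overfitting signal LOO-CV is designed to expose.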

Another valuable method is hold-out testing, where a portion of the data is set aside before training the model. After the model is built, its performance is evaluated on this hold-out set to simulate how well it would work in a real-world, future scenario. If the model performs well on the hold-out data, it’s a sign that the level of granularity and complexity is appropriate. If not, it may suggest that the model is too complex and overfitting to the training data, requiring simplification of the input data or a reduction in parameters.
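For MMM data, a hold-out test is usually time-ordered: train on earlier weeks and score on the most recent ones, rather than holding out random weeks. The sketch below assumes a 26-week hold-out and again uses synthetic data with least squares as a simplified stand-in for an MMM; the helper name is hypothetical.

```python
import numpy as np

def holdout_r2(X, y, n_holdout):
    """Fit least squares on all but the last n_holdout weeks; score R² on those weeks."""
    X1 = np.column_stack([np.ones(len(y)), X])
    X_train, y_train = X1[:-n_holdout], y[:-n_holdout]
    X_test, y_test = X1[-n_holdout:], y[-n_holdout:]
    coef, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)
    resid = y_test - X_test @ coef
    return float(1.0 - np.sum(resid ** 2) / np.sum((y_test - y_test.mean()) ** 2))

rng = np.random.default_rng(2)
n_weeks = 104
channels = rng.normal(size=(n_weeks, 3))
sales = 100 + channels @ np.array([5.0, 3.0, 2.0]) + rng.normal(scale=4.0, size=n_weeks)
granular = np.column_stack([channels, rng.normal(size=(n_weeks, 60))])

r2_channel = holdout_r2(channels, sales, n_holdout=26)
r2_granular = holdout_r2(granular, sales, n_holdout=26)
print(f"channel-level hold-out R²: {r2_channel:.2f}")
print(f"granular hold-out R²:      {r2_granular:.2f}")
```

A hold-out R² far below the in-sample figure, or even negative, is a sign that the inputs are too granular for the amount of data available.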

Increasing granularity without added complexity

We can increase granularity without adding complexity by using techniques like incrementality testing or sub-modelling, which allow for more detailed insights without overwhelming the model.

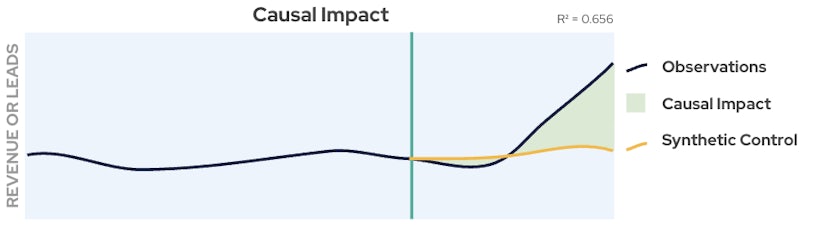

Increasing granularity with incrementality testing

After running an initial channel-level MMM, you might find that a channel like Meta ads has a high return on ad spend (ROAS), but this insight doesn’t give you specific details on which campaigns or objectives are driving that impact. To expand these results, you can use incrementality testing to break down the contribution of specific campaigns or objectives within the Meta ads channel.

For example, you could run a controlled experiment by temporarily pausing or adjusting spend on certain campaigns (or on top- vs bottom-funnel efforts) and measuring the change in overall performance. The difference between performance while the campaign or funnel component runs normally (the baseline) and performance while it is paused or reduced during the test period gives you an estimate of that campaign’s or funnel stage’s incremental impact.
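One common way to analyse such a test is a difference-in-differences comparison: pause the campaign in a set of test regions, keep it running in comparable control regions, and compare how sales changed in each. This geo-test design, the function name and the figures below are hypothetical; the post doesn’t prescribe a particular test design.

```python
def incremental_lift(test_pre, test_during, control_pre, control_during):
    """Difference-in-differences estimate of a paused campaign's incremental sales.

    test_*:    total sales in regions where the campaign was paused during the test
    control_*: total sales in comparable regions where it kept running
    A positive result means the campaign was driving sales in the test regions.
    """
    control_change = control_during - control_pre  # what would likely have happened anyway
    test_change = test_during - test_pre           # what happened with the campaign paused
    return control_change - test_change

lift = incremental_lift(test_pre=100_000, test_during=92_000,
                        control_pre=110_000, control_during=112_000)
print(f"estimated incremental sales in test regions: {lift:,}")
```

The control regions suggest sales would have risen by 2,000 anyway, so the drop in the test regions implies the paused campaign was driving about 10,000 in sales there. Scaling that to a national figure requires further assumptions.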

Once the incrementality testing is complete, these results can be combined with your MMM by attributing a portion of the channel’s overall contribution to the specific campaigns or funnel stages tested. For example, if the test shows that a particular bottom-of-funnel retargeting campaign is responsible for 30% of Meta ads sales, you can attribute 30% of the channel’s modelled contribution to that campaign while leaving the channel-level model itself unchanged. By repeating this process for other campaigns or funnel stages, you can expand the initial channel-level insights into more granular ones, helping to identify which parts of the funnel or which campaigns are most effective.
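Layering test results onto the channel-level output can be as simple as splitting the channel’s modelled contribution by the shares the tests support. The helper below is a hypothetical sketch, and the 200,000 sales figure for Meta ads is made up; the 30% share mirrors the retargeting example.

```python
def split_channel_contribution(channel_contribution, tested_shares):
    """Allocate an MMM channel contribution across tested campaigns.

    tested_shares: dict mapping campaign -> share (0-1) of the channel's sales
                   attributed to it by an incrementality test.
    Whatever the tests don't cover is kept as an explicit 'untested' remainder.
    """
    total_share = sum(tested_shares.values())
    if total_share > 1.0:
        raise ValueError("tested shares exceed 100% of the channel; check the tests")
    split = {name: channel_contribution * share for name, share in tested_shares.items()}
    split["untested"] = channel_contribution * (1.0 - total_share)
    return split

# Hypothetical: the MMM credits Meta ads with 200,000 in sales; a test puts retargeting at 30%.
meta_split = split_channel_contribution(200_000, {"retargeting": 0.30})
print(meta_split)
```

Keeping an explicit "untested" bucket avoids implying that the tested campaigns account for the whole channel.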

The key benefit of using incrementality testing to obtain more granular insights is that it provides a more accurate understanding of which campaigns or funnel stages are truly driving results, allowing for better optimisation of marketing spend. It also avoids overcomplicating the initial MMM by keeping the channel-level model intact and layering more granular insights on top. However, there are limitations, such as the need for controlled environments and enough data to perform reliable tests. Additionally, incrementality testing can be time-consuming, and the results may not always be generalisable across all campaigns or stages of the marketing funnel.

Increasing granularity with sub-modelling

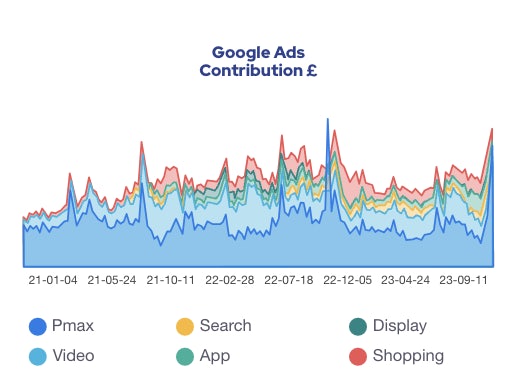

After running an initial channel-level MMM, you might want to expand the insights to understand how different campaigns or funnel stages within a channel contribute to overall performance. Another way to do this is to build a sub-model that focuses specifically on campaign types or funnel levels, using data such as spend or impressions as input. For instance, if your MMM shows that Google ads drive significant sales, you could create a sub-model to determine how different campaign types within the Google ads channel contribute to this overall impact. Using spend or impression data at the campaign level, the sub-model estimates how much of the channel’s total contribution can be attributed to each campaign type.
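A minimal sub-model sketch follows, assuming a simple linear relationship between each campaign type’s spend and the channel’s weekly contribution from the main MMM (no adstock or saturation at this level). The function name, campaign names, spend levels and "true" returns are all synthetic. There is deliberately no intercept and no seasonal or economic controls, because those effects are assumed to be handled already in the channel-level model.

```python
import numpy as np

def submodel_split(channel_contribution, campaign_spend, names):
    """Split a channel's weekly MMM contribution across its campaign types.

    channel_contribution: weekly contribution of the channel from the main MMM
    campaign_spend:       (weeks x campaign types) spend matrix
    Plain least squares is used for brevity; a production version would likely
    constrain coefficients to be non-negative (e.g. scipy.optimize.nnls).
    """
    coef, *_ = np.linalg.lstsq(campaign_spend, channel_contribution, rcond=None)
    raw = coef * campaign_spend.sum(axis=0)  # estimated total contribution per campaign type
    shares = raw / raw.sum()
    # Rescale so campaign-level figures add up exactly to the MMM's channel total.
    return dict(zip(names, shares * channel_contribution.sum()))

rng = np.random.default_rng(2)
weeks = 104
spend = rng.uniform(1_000, 5_000, size=(weeks, 3))  # hypothetical weekly spend per campaign type
true_roas = np.array([2.0, 0.8, 0.5])               # made-up returns used to simulate data
contribution = spend @ true_roas + rng.normal(scale=300, size=weeks)

split = submodel_split(contribution, spend, ["performance_max", "display", "video"])
for name, value in split.items():
    print(f"{name}: {value:,.0f} ({value / contribution.sum():.0%} of the channel)")
```

The final rescaling keeps the two layers consistent: whatever the sub-model estimates, the campaign-level figures sum to the channel contribution reported by the main MMM.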

This sub-model can remain relatively simple because it doesn’t need to account for factors like seasonality, macroeconomics, or other externalities, as these have already been handled in the initial channel-level MMM. Instead, the sub-model focuses on breaking down the internal mechanics of the channel itself, isolating the effects of specific campaigns or funnel stages. For example, if Google ads account for 10% of sales, the sub-model might reveal that Performance Max campaigns contribute 7 percentage points of that, while display and video campaigns contribute the remaining 3 points. This layered approach allows for granular insights without reintroducing the external complexities that have already been modelled.

The benefit of this method is that it allows for more granular insights without making the overall model overly complicated. It also leverages the broader MMM framework, ensuring that important external factors have been considered in the channel-level model before diving into more granular details. However, one limitation is that the sub-model could struggle to explain the contributions passed down from the initial model when some campaign types have little spend or their spend moves closely together, as there may not be enough independent variation in the data to distribute the impact accurately across campaigns. Additionally, since the sub-model is built on assumptions made in the initial MMM, any inaccuracies in the broader model could cascade down to the sub-level analysis.
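Before trusting a sub-model, it’s worth checking whether the campaign types actually vary independently. The sketch below flags pairs of campaign types whose weekly spend is highly correlated; the helper name, the 0.9 threshold and the synthetic data (in which video spend is set as a fixed ratio of display spend) are illustrative assumptions.

```python
import numpy as np

def flag_collinear_pairs(campaign_spend, names, threshold=0.9):
    """Return campaign-type pairs whose weekly spend is highly correlated.

    Highly correlated spend gives a sub-model little independent variation
    to separate the two effects. The default threshold is an arbitrary example.
    """
    corr = np.corrcoef(campaign_spend, rowvar=False)  # columns are campaign types
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) >= threshold:
                pairs.append((names[i], names[j], round(float(corr[i, j]), 3)))
    return pairs

rng = np.random.default_rng(3)
weeks = 104
display = rng.uniform(1_000, 5_000, size=weeks)
video = 0.5 * display + rng.normal(scale=50, size=weeks)  # budgeted as half of display spend
pmax = rng.uniform(1_000, 5_000, size=weeks)              # varies independently
spend = np.column_stack([pmax, display, video])

pairs = flag_collinear_pairs(spend, ["performance_max", "display", "video"])
print(pairs)
```

Pairs flagged like this are candidates for merging into a single sub-model input, since the data offer little basis for separating their effects.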

Which approach should you take?

The approach you should take depends on the resources and time you have available. If you have the time and commitment to conduct frequent, well-controlled experiments, the incrementality testing approach is generally best practice. It provides the most accurate insights into the specific contributions of channels and campaigns by directly measuring their causal impact. However, if frequent testing isn’t feasible due to time constraints, budget limitations, or the complexity of managing these experiments, sub-modelling is a more practical alternative. By leveraging existing spend or impression data to estimate contributions within the channel, sub-modelling allows you to gain granular insights while avoiding the need for ongoing experimentation. While incrementality testing is ideal for precision, sub-modelling offers a more scalable solution when resources are limited.

In conclusion, expanding the granularity of your Marketing Mix Model beyond channel-level insights to capture campaign or funnel-specific contributions is essential for optimising marketing performance. Whether through incrementality testing or sub-modelling, both approaches offer valuable ways to dig deeper into your data without overwhelming your model with complexity. The key is to choose the method that best aligns with your resources and objectives. By taking the right approach, you can enhance the effectiveness of your marketing strategy and make more informed decisions.