What is model validation and why is it important?

Model validation is the process of evaluating a statistical model’s performance and confirming that the model achieves its intended purpose. It involves a series of techniques and assessments that determine how well a model predicts outcomes on new data that were not part of the training set. This step should not be skipped: it provides confidence that the model will perform well on unseen data, and it exposes overfitting, where a model works well on training data but fails to generalise to new data.

In the context of media effectiveness measurement, model validation is particularly important as it ensures that the insights derived from the model about the impact of various media channels are accurate and actionable. Without thorough validation, any decisions based on a statistical model could lead to ineffective or even detrimental marketing strategies, resulting in wasted resources and missed opportunities for marketing optimisation. Proper validation helps in identifying and correcting biases, improving model robustness, and ultimately driving more effective media planning and resource allocation.

Model validation techniques

When validating statistical models, several techniques can be employed to check that the model performs well. One common technique is hold-out testing, which involves splitting the dataset into two distinct parts: a training set and a testing set. Common splits are 80:20 and 70:30. The model is trained on the training set and evaluated on the testing set. This approach is straightforward and easy to implement, and because the testing set plays no part in training, it gives a clear estimate of the model’s performance on new data. The main limitations of hold-out testing are that it does not use the data as efficiently as cross-validation, especially with small datasets, and that the results can depend heavily on where the data are split.
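
As a rough sketch of the split described above, the snippet below holds out the last 20% of rows by default. The function name `holdout_split` and the weekly example data are hypothetical, and keeping the rows in their original order (rather than shuffling) is an assumption that tends to suit time-ordered marketing data; pass `shuffle=True` if row order carries no meaning.

```python
import random


def holdout_split(rows, test_fraction=0.2, shuffle=False, seed=0):
    """Split rows into (train, test).

    With shuffle=False the final rows become the test set, which keeps
    time-ordered data in sequence. With shuffle=True the rows are
    shuffled reproducibly before splitting.
    """
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    rows = list(rows)
    if shuffle:
        random.Random(seed).shuffle(rows)
    # Always keep at least one row for testing.
    n_test = max(1, round(len(rows) * test_fraction))
    return rows[:-n_test], rows[-n_test:]


# Hypothetical example: ten weeks of data, 80:20 split.
weeks = list(range(1, 11))
train, test = holdout_split(weeks, test_fraction=0.2)
print(train)  # [1, 2, 3, 4, 5, 6, 7, 8]
print(test)   # [9, 10]
```

The model would then be fitted on `train` only and scored on `test`.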

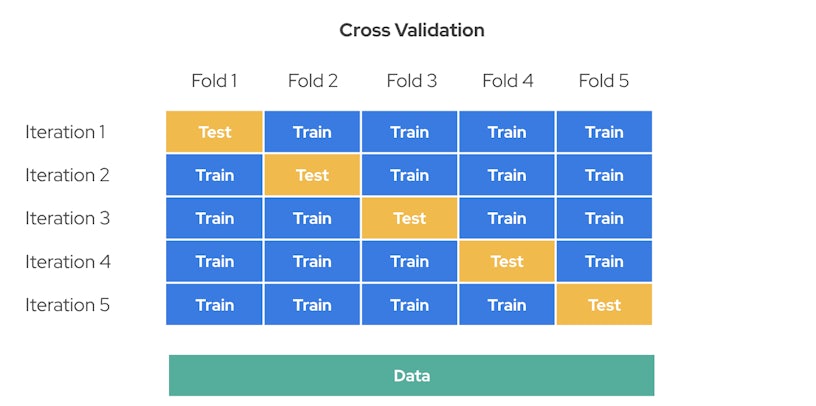

Another widely used technique is cross-validation. Cross-validation involves dividing the dataset into multiple subsets, or “folds”. The model is trained on some folds and tested on the remaining fold, and the process is repeated several times with a different fold used as the test set each time. The concept is similar to hold-out testing, but the train/test split falls in a different place on each iteration. This technique is particularly beneficial because it makes fuller use of the available data and provides a robust estimate of the model’s performance. However, it can be computationally expensive, especially with large datasets, because the model is refitted once per fold.
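
To make the fold rotation concrete, here is a minimal sketch that uses only the Python standard library. The helper names (`k_fold_indices`, `fit_line`, `cross_validated_rmse`), the one-variable straight-line model and the spend/sales figures are all made up for illustration; a real model would have more inputs and would likely be fitted with a dedicated library. Using contiguous folds rather than shuffled ones is also an assumption.

```python
from statistics import mean


def k_fold_indices(n_rows, k):
    """Yield (train_idx, test_idx) pairs; each row is tested exactly once."""
    if not 2 <= k <= n_rows:
        raise ValueError("k must be between 2 and the number of rows")
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        test_idx = list(range(start, stop))
        train_idx = [i for i in range(n_rows) if i < start or i >= stop]
        yield train_idx, test_idx
        start = stop


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return y_bar - slope * x_bar, slope


def cross_validated_rmse(x, y, k=5):
    """Return one out-of-fold RMSE per fold."""
    fold_rmse = []
    for train_idx, test_idx in k_fold_indices(len(x), k):
        intercept, slope = fit_line(
            [x[i] for i in train_idx], [y[i] for i in train_idx]
        )
        squared_errors = [
            (y[i] - (intercept + slope * x[i])) ** 2 for i in test_idx
        ]
        fold_rmse.append(mean(squared_errors) ** 0.5)
    return fold_rmse


# Made-up illustrative data: weekly media spend and sales.
spend = [10, 12, 15, 11, 18, 20, 14, 16, 19, 13]
sales = [52, 58, 66, 55, 75, 82, 63, 70, 78, 60]
scores = cross_validated_rmse(spend, sales, k=5)
print(len(scores), round(mean(scores), 2))
```

Averaging the per-fold scores gives a single performance estimate, while the spread across folds hints at how sensitive the model is to the choice of split.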

Each of these techniques has its benefits and limitations. Cross-validation offers a thorough validation process and makes fuller use of the data, but it can be computationally expensive. Hold-out testing is simple and quick, but it can be inefficient with smaller datasets. Choosing the appropriate validation technique depends on the specific context of the model and the available data, balancing computational feasibility against the need for robust performance estimates.

Model validation in Marketing Mix Modelling

Model validation is vital in Marketing Mix Modelling (MMM) because it confirms that the model accurately represents the relationship between marketing activities and sales, ensuring that the insights derived are actionable and reliable. Proper validation helps in identifying any inaccuracies, providing a clear understanding of how different marketing tactics influence outcomes. For MMM specifically, cross-validation and hold-out testing are the most frequently used techniques due to their ability to test model robustness and prevent overfitting.

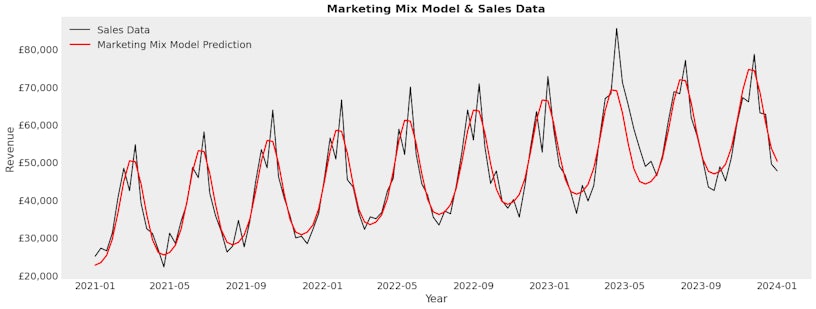

Goodness of fit is closely related to validation in statistical modelling. Goodness of fit measures how well a model’s predicted values match the observed data (as in the visual above), serving as an initial check on model performance. Once the relevant goodness of fit checks are done, we move on to validation proper, testing the model’s performance on unseen data to ensure it generalises well beyond the sample used for estimation. Goodness of fit is therefore a key component of the broader validation process, ensuring the model is both accurate and generalisable, which is crucial for making reliable predictions and informed decisions in MMM.
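
One widely used goodness of fit measure is R², the share of variation in the observed data that the model’s predictions account for. The article does not name a specific metric, so treating R² as the check here is an assumption, and the function name `r_squared` and the sales figures are hypothetical.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R-squared is undefined when actual values are constant")
    return 1 - ss_res / ss_tot


# Made-up in-sample values: observed sales vs. the model's fitted sales.
observed = [52, 58, 66, 55, 75, 82]
fitted = [53, 57, 65, 56, 76, 80]
print(round(r_squared(observed, fitted), 3))
```

A high in-sample R² only shows that the model matches the data it was estimated on, which is why the out-of-sample checks above still matter.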

Overall, be sure to ask your MMM vendor (along with other essential questions) whether they carry out these validation and goodness of fit checks. These methods give you confidence in your media investment decisions by ensuring that your marketing strategies are based on sound, validated models.

Model validation in Incrementality Testing

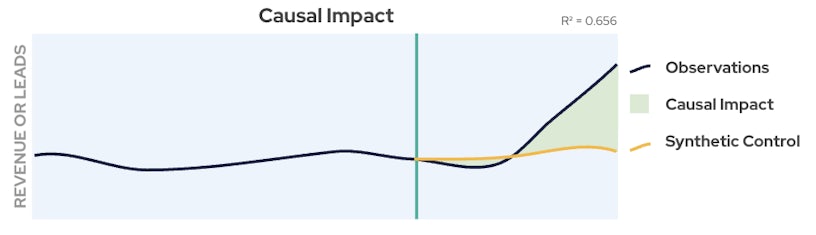

Model validation is crucial in incrementality testing because, especially in synthetic control-based testing, the model predictions essentially determine the outcome. As mentioned in previous blogs, a synthetic control-based incrementality test boils down to comparing a “baseline” forecast (generated by a statistical model), in which the treatment is assumed absent, with the actual outcome. If the model doesn’t perform well on unseen data, the forecasts won’t accurately capture what would’ve happened in the absence of the treatment.
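
The comparison of actual outcome against baseline can be sketched in a few lines. The function name `incremental_lift` and all figures below are hypothetical; in a real test the baseline would come from the fitted synthetic control model rather than being typed in by hand.

```python
def incremental_lift(actual, baseline):
    """Return (absolute_lift, relative_lift) summed over the test period."""
    if len(actual) != len(baseline) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    total_baseline = sum(baseline)
    if total_baseline == 0:
        raise ValueError("baseline total must be non-zero")
    absolute = sum(actual) - total_baseline
    return absolute, absolute / total_baseline


# Made-up test-period sales and the model's no-treatment baseline forecast.
actual_sales = [120, 125, 130]
baseline_forecast = [100, 105, 110]
absolute, relative = incremental_lift(actual_sales, baseline_forecast)
print(absolute, f"{relative:.1%}")
```

Because the lift is simply the gap between the two series, any systematic error in the baseline forecast flows straight into the lift estimate.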

With regard to validation techniques, cross-validation and hold-out testing are powerful and frequently implemented methods of assessing the predictive accuracy of your synthetic control. To help measure forecasting accuracy, calculating the root-mean-square error (RMSE) and analysing the residuals are recommended in addition to these validation techniques. The RMSE measures how far, on average, the model predictions are from the historical training data. A small RMSE, relative to the scale of the outcome being modelled, indicates good model performance. Residuals are defined as the difference between the actual observations and the predicted observations in the training data. Good model performance is implied when your residuals appear to be a sequence of random numbers, close to 0, that don’t follow any predictable pattern or trend over time. In other words, when your residuals closely mimic white noise.
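
The two diagnostics above are quick to compute. In this sketch the function names and the data are made up, and the lag-1 autocorrelation is just one simple, assumed way of checking whether residuals look like white noise; it is not a full statistical test.

```python
from math import sqrt


def residuals(actual, predicted):
    """Actual minus predicted, one value per observation."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    return [a - p for a, p in zip(actual, predicted)]


def rmse(actual, predicted):
    """Root-mean-square error between actual and predicted values."""
    res = residuals(actual, predicted)
    return sqrt(sum(r * r for r in res) / len(res))


def lag1_autocorrelation(values):
    """Correlation of a series with itself shifted by one step.

    Values near 0 are consistent with white noise; values near 1 or -1
    suggest a pattern that the model has not captured.
    """
    m = sum(values) / len(values)
    denom = sum((v - m) ** 2 for v in values)
    if denom == 0:
        return 0.0
    num = sum((values[i] - m) * (values[i - 1] - m) for i in range(1, len(values)))
    return num / denom


# Made-up pre-treatment data: actual sales vs. the synthetic control's fit.
actual = [100, 104, 98, 110, 107, 103]
fitted = [101, 102, 100, 108, 108, 102]
res = residuals(actual, fitted)
print(res)  # [-1, 2, -2, 2, -1, 1]
print(round(rmse(actual, fitted), 3))
print(round(lag1_autocorrelation(res), 3))
```

Plotting the residuals over time is a useful companion to these numbers, since a visible trend or seasonal wave is often easier to spot by eye.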

Effective validation helps confirm that the observed lift or impact is truly due to the marketing intervention and not due to forecasting inaccuracies or other external factors. This validation is essential to provide confidence in the incrementality estimates, thereby enabling you to make informed decisions about future campaigns, channels and budget allocations.